UX Design for Agentic Systems

Do tools like Figma solve the core design challenges of AI applications?

Most mainstream product design tools like Figma & Sketch treat user interface design as the atomic unit they are built around. Mockups & visual components are the basis of these tools, and are typically the mechanism by which other interaction design concepts like user intent modeling & user state transitions are conveyed.

This paradigm makes a lot of sense for SaaS products, where each user intent or workflow maps directly to a specific UI. If you are a CRM and want to support a workflow where the user can build automations on a list, you build a user interface for that. If you are a stock trading app and want to let people buy options, you build a user interface for that. In effect, interaction design is done implicitly by laying out user interfaces.

Traditionally, it would have been quite difficult to imagine a piece of software that did not follow this paradigm. How can you support a wide array of user workflows, intents, and states with a single UI?

Yet if you look at the emerging landscape of AI-native tools, especially those “selling the work” by automating what humans or services firms do today, this dynamic has changed. Many such products are quite UI-light, but they demand extremely complex reasoning about user state and interaction patterns.

Exploring an example

As an example, let’s consider Mindy, an AI “chief of staff” assistant that operates entirely over email. You can ask it anything in natural language over email and it will get back to you and try to help.

In one sense, this product has little to no user interface surface area: it is just a set of emails! From a visual and UI design perspective, a designer for such a product might sketch out different email text layouts in Figma for different use cases, but ultimately there is not much work to be done there.

Yet, on the other hand, a product like Mindy has immense UX design challenges that are, in my opinion, far more difficult to deal with than those of a typical SaaS product. These challenges stem from (1) needing to mimic the behavior of a human chief of staff who can respond to a somewhat arbitrary, long-tail set of questions, and (2) the probabilistic nature of AI systems.

You might imagine that a UX designer for Mindy needs to think through the following sorts of things:

What class of questions are people likely to ask Mindy?

What types of questions should Mindy attempt to respond to vs. not?

Should there be specialized, more “productized” workflows for the most common (“head”) queries? For example, perhaps meeting scheduling and research are the two most important use cases.

What should Mindy do in cases where it is not confident it can answer?

How should Mindy handle the various forms of user follow-ups or responses? What could these look like?

Most of these challenges revolve around user state modeling: what states can a user be in, how do we classify each of these states, how should the product operate in each state, which state transitions should we model, and what user experience do we want to deliver in each state? These are the core design principles that would then guide an engineering and product team in architecting the LLM system.
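To make the state-modeling idea concrete, here is a minimal Python sketch of an explicit state machine for a hypothetical email assistant. The state names (`UserState`, `RECEIVED`, `AWAITING_USER`, and so on), the `TRANSITIONS` table, and the helper functions are illustrative assumptions, not Mindy's actual design. The point is that the designer's decisions about which states exist and which transitions are allowed can be written down as data that engineering then enforces.

```python
from enum import Enum, auto
from typing import Dict, Set


class UserState(Enum):
    """Hypothetical states a user's request can be in (illustrative only)."""
    RECEIVED = auto()             # new email arrived, not yet classified
    NEEDS_CLARIFICATION = auto()  # assistant is unsure what the user wants
    AWAITING_USER = auto()        # assistant has asked the user something
    IN_PROGRESS = auto()          # assistant is actively handling the request
    DONE = auto()                 # request completed
    DECLINED = auto()             # out of scope; assistant politely declines


# The design decision lives here: which transitions are allowed at all.
TRANSITIONS: Dict[UserState, Set[UserState]] = {
    UserState.RECEIVED: {UserState.NEEDS_CLARIFICATION, UserState.IN_PROGRESS, UserState.DECLINED},
    UserState.NEEDS_CLARIFICATION: {UserState.AWAITING_USER},
    UserState.AWAITING_USER: {UserState.IN_PROGRESS, UserState.DECLINED},
    UserState.IN_PROGRESS: {UserState.AWAITING_USER, UserState.DONE},
    UserState.DONE: set(),
    UserState.DECLINED: set(),
}


def transition(current: UserState, nxt: UserState) -> UserState:
    """Return `nxt` if the design allows current -> nxt, else raise ValueError."""
    if nxt not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition: {current.name} -> {nxt.name}")
    return nxt


def is_terminal(state: UserState) -> bool:
    """A state with no outgoing transitions ends the conversation flow."""
    return not TRANSITIONS[state]
```

Walking a conversation through `transition` (for example RECEIVED → IN_PROGRESS → AWAITING_USER → IN_PROGRESS → DONE) succeeds, while jumping from DONE back to IN_PROGRESS raises. That surfaces unmodeled user states as explicit errors rather than leaving the model to improvise.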

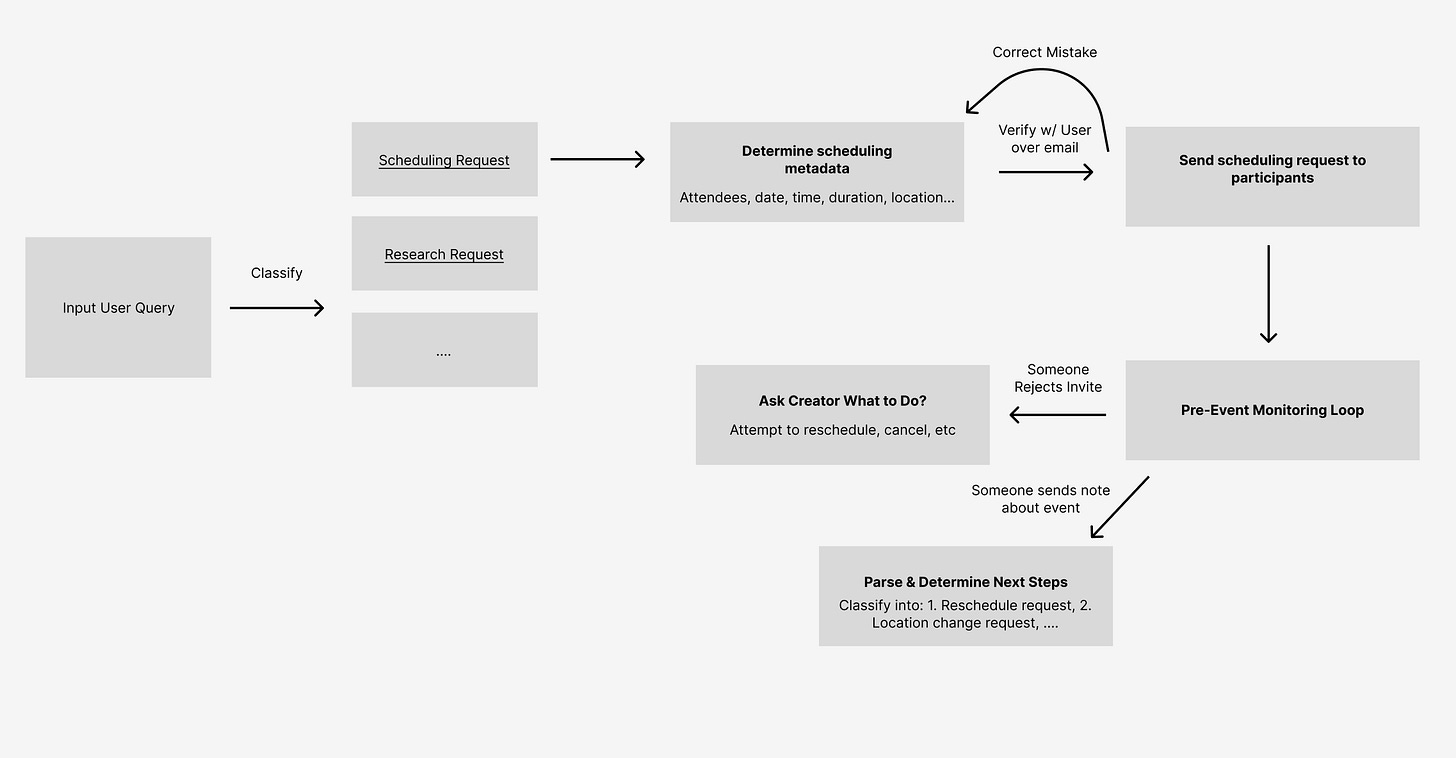

For example, if scheduling is the most important head query for a product like Mindy, you likely need to design the system to first classify the incoming email and then move through a series of very specific steps, executed via LLMs, to carry out the scheduling request, such as: 1. collecting key scheduling metadata (attendees, date, time, location), 2. confirming the scheduling request with the user, 3. sending the event invite to participants, and 4. monitoring/polling for event rejections or rescheduling requests from attendees.
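The flow above can be sketched in Python under a few loudly stated assumptions: `classify` is a hypothetical keyword stand-in for an LLM classifier, the 0.7 confidence threshold is arbitrary, and the step names in `SCHEDULING_STEPS` are invented labels for the four steps listed. The sketch shows two design decisions being enforced in code: low-confidence emails go to a clarification path instead of a guess, and scheduling steps can only complete in order.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Hypothetical labels for the ordered steps of the scheduling flow.
SCHEDULING_STEPS = [
    "collect_metadata",   # attendees, date, time, location
    "confirm_with_user",
    "send_invites",
    "monitor_responses",  # watch for rejections / reschedule requests
]
REQUIRED_FIELDS = ("attendees", "date", "time", "location")


def classify(email_body: str) -> Tuple[str, float]:
    """Keyword stand-in for an LLM classifier; returns (label, confidence)."""
    text = email_body.lower()
    if any(word in text for word in ("schedule", "meeting", "calendar")):
        return "scheduling", 0.9
    if any(word in text for word in ("research", "look into")):
        return "research", 0.8
    return "unknown", 0.3


def route(email_body: str, threshold: float = 0.7) -> str:
    """Send low-confidence emails to a clarification path instead of guessing."""
    label, confidence = classify(email_body)
    if confidence < threshold:
        return "ask_user_to_clarify"
    return "scheduling_flow" if label == "scheduling" else "general_flow"


@dataclass
class SchedulingRequest:
    metadata: Dict[str, str] = field(default_factory=dict)
    completed: List[str] = field(default_factory=list)

    def missing_fields(self) -> List[str]:
        return [f for f in REQUIRED_FIELDS if not self.metadata.get(f)]

    def next_step(self) -> Optional[str]:
        """The first step not yet completed, or None when the flow is finished."""
        for step in SCHEDULING_STEPS:
            if step not in self.completed:
                return step
        return None

    def complete(self, step: str) -> None:
        """Mark `step` done, refusing out-of-order steps or incomplete metadata."""
        expected = self.next_step()
        if step != expected:
            raise ValueError(f"expected step {expected!r}, got {step!r}")
        if step == "collect_metadata" and self.missing_fields():
            raise ValueError(f"missing metadata: {self.missing_fields()}")
        self.completed.append(step)
```

In a real system each step would likely wrap its own LLM call. The surrounding structure, though, is deterministic and is exactly the part a UX designer would specify.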

A good UX designer would probably go 100x deeper on this flow than I did above, modeling out all of these user states and how to handle them. While each of these sub-states and state transitions likely involves an LLM or small agentic system in some capacity (email classification, email understanding, email sending, etc.), the overall state flow needs to be defined more precisely to create a good UX in the vast majority of cases.

And while some visual design certainly needs to accompany this sort of state modeling (e.g. email templates for sub-steps), I would argue that 90% of the design work at hand is this form of user-needs modeling and designing workflows that fit user expectations.

I think this dynamic fundamentally breaks many of the design assumptions that products like Figma are built around. While you can, of course, do this sort of higher-abstraction workflow mapping in a tool like Figma (indeed, I made the above image in Figma), there is probably a different set of primitives you would build to truly optimize for this sort of design task. Stately is an interesting example of what a more state-oriented design approach might look like.

The shifting role of UX designers in agentic companies

Complex logic flows and state diagrams decoupled from visual design are not entirely new: Slack’s push notification decision-making logic is a fun, older, non-AI example of something similar. The difference, I think, is that in many agentic products this becomes the core UX design challenge of building the product altogether.

If you are Cognition, how do you model all the steps and sub-steps the system might take to build a large software engineering project on its own, in a way that is understandable to the user and fits the user’s mental model? If you are Dosu, how do you determine the right way to respond to the wide array of ways someone might ask you questions or reply to you in a GitHub issue?

In my experience, people building agentic systems like this see the biggest improvements in reliability, quality, and performance from this form of domain and user modeling and from thinking through more structured state transitions. Hex, for example, saw some of its biggest improvements in Hex Magic when it started to constrain the agentic control flow much more tightly, enforcing things like the number of cells that could be output and the relative order of SQL, Python, and data visualization cells. In essence, they modeled the state flow of the system more tightly.
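Hex's actual implementation is not described here, so the following is only a hypothetical Python sketch of what "tightly constraining the agentic control flow" can mean in practice: before executing an LLM-proposed plan of notebook cells, validate it against a maximum cell count and a fixed relative order of cell types. `MAX_CELLS = 5`, the `CELL_RANK` ordering (SQL, then Python, then chart), and `validate_plan` are all assumptions chosen for illustration.

```python
from typing import Dict, List

MAX_CELLS = 5  # hypothetical cap on cells per response
# Assumed ordering: query data first, transform it, then visualize it.
CELL_RANK: Dict[str, int] = {"sql": 0, "python": 1, "chart": 2}


def validate_plan(cells: List[str]) -> List[str]:
    """Return constraint violations for a proposed cell plan (empty list = allowed)."""
    errors: List[str] = []
    if len(cells) > MAX_CELLS:
        errors.append(f"too many cells: {len(cells)} > {MAX_CELLS}")
    unknown = sorted({c for c in cells if c not in CELL_RANK})
    if unknown:
        errors.append(f"unknown cell types: {unknown}")
        return errors  # ordering is undefined for unknown cell types
    for i in range(1, len(cells)):
        # A cell may repeat or advance the current rank, but never go back.
        if CELL_RANK[cells[i]] < CELL_RANK[cells[i - 1]]:
            errors.append(f"cell {i} ({cells[i]}) cannot follow {cells[i - 1]}")
    return errors
```

A plan like `["sql", "python", "chart"]` passes, while `["chart", "sql"]` is rejected. One possible design is to send the rejected plan back to the model along with the error messages, so the LLM still does the creative work but only within the state flow the designers allowed.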

Ironically, while getting the UX and interaction design right in these systems is one of the hardest (and most important) things to do well, hiring UX designers for these companies is almost impossible, because the UX job to be done is so different from that of most SaaS apps. Less work needs to be done on UI, and far more on building mental models of user behavior and modeling user state. That work can no longer be done implicitly by mocking up visual workflows.

The job of a designer in a product like this is also more technical, because there is a much tighter coupling between technical system design and interaction design. Proper UX design of agentic systems requires a fairly in-depth understanding of AI system design, which is why most of this work is done today by CEOs or engineering leads.

Interaction design for products like this feels fundamentally different from what it has looked like before. I wonder if this will lead to very different design workflows, design tools, and team dynamics between UX designers and engineering & product in agentic companies.
