State of Foundation Models - 2025

A holistic overview of everything from model research to product building

I recently put together an extensive, 100+ slide presentation covering the state of the foundation model market in 2025. You can find it at foundationmodelreport.ai.

I also put together a live presentation of a condensed version of the slide deck here, if you prefer listening vs. reading.

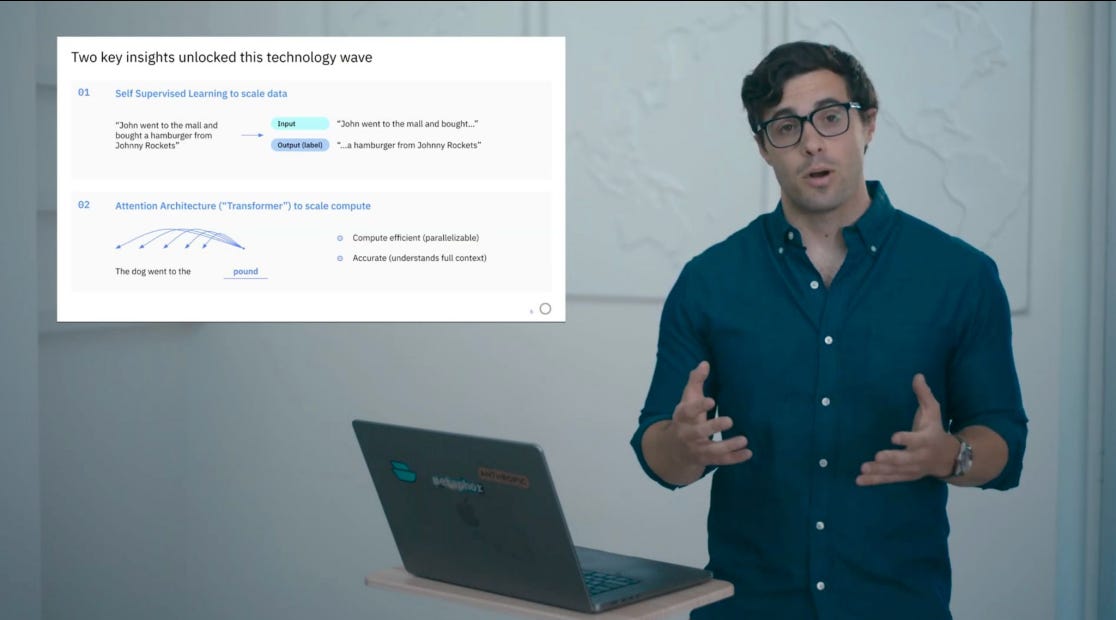

You can think of this as a spiritual successor to my 2023 “Foundation Model Primer”. I aim to recreate a lot of what people liked about that presentation - particularly how it more holistically covered the space end-to-end.

Some of my favorite tidbits from the deck this year:

Generative AI has gone mainstream - 1 in 8 workers worldwide now uses AI every month, with 90% of that growth happening in just the last 6 months. AI-native applications are now well into the billions in annual run rate.

The pace of research progress is wild - The set of tasks an AI model can reliably do is doubling every ~7 months, and the cost for a given unit of intelligence is falling >100x year over year.

Training costs balloon, but OSS convergence continues - A typical model now costs >$300M to train, yet only stays a top model for about 3 weeks.

Substantial progress in “newer” modalities - We are at a ChatGPT moment for video models. Science models in areas like protein folding & materials are starting to get interesting. Robotics models, world models, & voice-to-voice models are improving rapidly.

The venture frenzy is intense - 10% of all venture dollars in 2024 went to foundation model companies, and 50% of venture dollars in 2025 have gone to AI startups.

Reasoning models are the new scaling frontier - As a result, there is a ton of focus right now on verifier models, reward models, and reinforcement learning. Are we going to see quality generalist reward models?

Agents are starting to work, but design patterns & architectures are still so early - Model pickers are like picking your web video codec in 1998. “Systems” paradigms like sequential sampling or fan-out/fan-in are under-appreciated and will become more mainstream.

MCP is exciting, but the agent-computer interface for tool use is underrated - Many good startups I know forgo MCP for purpose-built integrations as a result.

Good data curation, retrieval, and evals are still under-appreciated - For example, a well-architected RAG system is 10-100x better than a long-context-only model in latency, quality, and cost for even straightforward use cases.
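To make the fan-out/fan-in idea from the agents point above a bit more concrete, here is a minimal sketch in Python. It is an illustration and not taken from the deck: `stub_model` is a hypothetical stand-in for a real model call, and majority voting is just one assumed way to do the fan-in step (a verifier or reward model could fill the same role).

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def stub_model(prompt: str, seed: int) -> str:
    """Hypothetical stand-in for a model call; returns a canned answer per seed."""
    canned = ["42", "42", "41", "42", "40"]
    return canned[seed % len(canned)]


def fan_out(prompt: str, call: Callable[[str, int], str], n: int) -> List[str]:
    """Fan-out: request n independent samples for the same prompt in parallel."""
    with ThreadPoolExecutor(max_workers=n) as pool:
        futures = [pool.submit(call, prompt, seed) for seed in range(n)]
        # Collect results in submission order so the output is deterministic.
        return [f.result() for f in futures]


def fan_in(candidates: List[str]) -> str:
    """Fan-in: reduce the candidates to one answer by majority vote (an assumption)."""
    return Counter(candidates).most_common(1)[0][0]


def answer(prompt: str, call: Callable[[str, int], str] = stub_model, n: int = 5) -> str:
    """Run the full fan-out/fan-in pipeline for a single prompt."""
    return fan_in(fan_out(prompt, call, n))


if __name__ == "__main__":
    print(answer("What is 6 x 7?"))
```

Swapping `stub_model` for a real API client and `fan_in` for a learned scorer keeps the same shape, which is roughly why this kind of "systems" pattern is independent of which model you pick.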

And honestly - that’s just scratching the surface! Would love to hear what you think — and if you find it valuable, feel free to share it with others building in the space.