All agents will become coding agents

Code generation will be the most ubiquitous tool for AI Agents

Yesterday, Anthropic launched Claude Cowork, a new consumer product experience that helps you get work done by putting a rich UI on top of Claude Code. Anthropic themselves admits that this came as a result of seeing countless Claude Code power users using Claude Code for things that have nothing to do with software engineering - like managing your personal todo list or handling your emails.

It turns out that the “LLM + Computer” agent paradigm that Claude Code pioneered, where an LLM has access to a file system, a bash terminal, code generation, and similar linux computing primitives is exceptionally powerful - regardless of whether you actually need to write code as part of your actual task.

I increasingly think it is likely that all agents will move towards this architectural design pattern. In other words, all agents will become coding agents.

This post will explore early examples of this across different applied AI startups, discuss reasons for why this architecture is so powerful, and explore its downstream implications on startup opportunities in applied AI and AI infrastructure.

Code generation as a universal tool

Why does code generation matter for non-coding agents? Lets begin by exploring the common set of reasons why code generation is so effective for non-software-engineering agents.

Code as a reasoning layer

The vast majority of AI startups need to do nuanced, mathematical reasoning of some form as part of their core function. AI accounting startups need to do spreadsheet manipulation. AI financial research startups need to be able to pivot and filter financial data. AI document processing startups need to manipulate numerical data extracted from tables and aggregate/summarize it. AI research scientists will need to analyze research results.

Doing this type of precise reasoning in token space is very, very unreliable due to how language models tokenize language. What is much more effective is treating all numerical manipulation as a code generation problem, similar to AgentMath.

Code as a tool-calling layer

Traditional tool-calling paradigms (ala ReAct) have the LLM repeatedly process the context, identify the next tool to use, run that tool, and repeat.

This is both inefficient (since it requires calling an LLM for every tool call) and more prone to mistakes than to ask the LLM one time to produce code to run a sequence of tool calls that allow the agent to make progress.

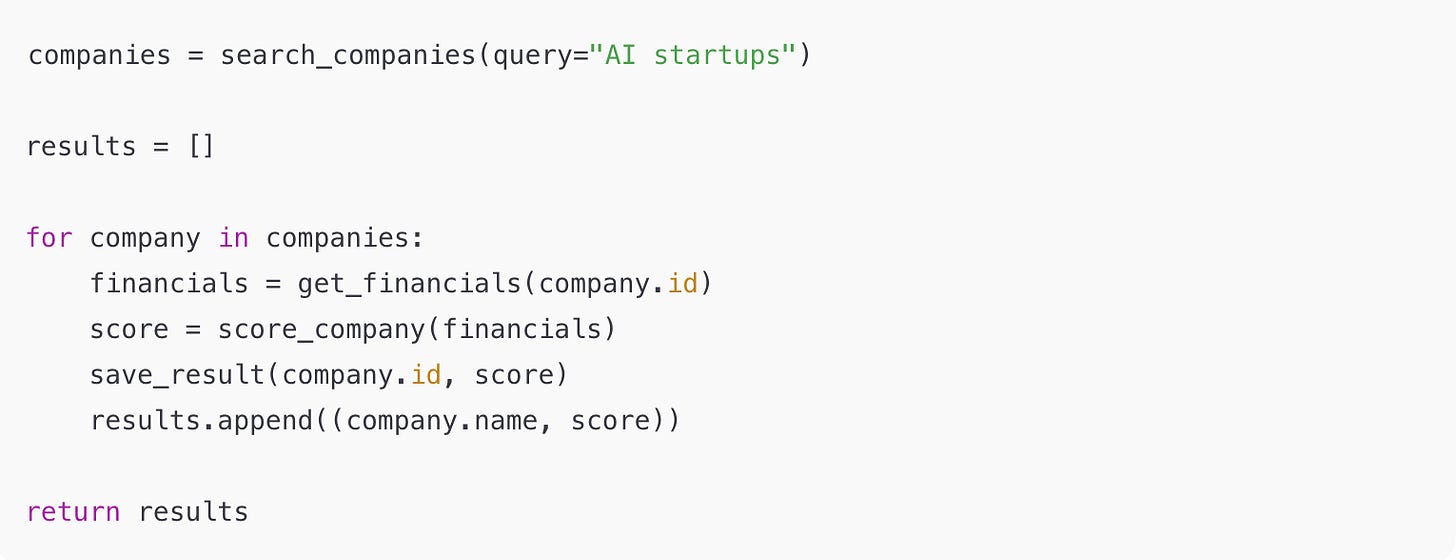

For example, imagine an agent is tasked to synthesize a bunch of financial records, and has access to a company search tool & a fetch financials for company tool. What you want to do is:

Search for all companies

For each company, fetch financials

For each financial record, compute a score

Save the result

This task is much more effectively achieved by having the LLM write the code to execute this loop once, and to then procedurally execute the loop, rather than having linearly call tool > reason about next step > call tool > reason about next steps etc.

This blog post is a great deeper dive on this concept, and this recent post by Replit reflects the same idea. Claude is moving its default tool-use paradigm to Claude Skills, which stores tools in a file system which can be found via bash and file system commands, is a direct reflection of the market moving in this direction.

Code as a context management layer

Computing environments provide a powerful substrate for dealing with context management. This video and blog about context engineering in Manus are some of the best illustrations of this that I have seen.

Manus stores context in the filesystem of a computing environment, utilizes bash commands & file paths to progressively disclose context and tools, and decomposes most user tasks into some combination of: 1. Web access, 2. Code generation, 3. Context search, 4. Computing utilities (bash, file system, etc).

The reason this works so well is that most context & tools are, by default, hidden from the LLM at any step, but the LLM always has access to a few utilities (e.g. bash commands) that allow it to progressively disclose data or tools it may need. This avoids context rot, and also saves you a lot of cost and latency thanks to minimizing token usage.

Note that using code for tool-calling is a particularly important version of this. Loading up a ton of MCP servers completely overloads the context window of most models, but having a small set of composable tools + code as an orchestration layer allows this to be avoided.

A lot of research work is now taking these ideas further. For example, RLMs explore an agent architecture where the context is represented as a variable in memory that is not shown in any way to the LLM unless it specifically asks for it using various tools (grep, peeking, etc).

I think this will become the predominant pattern for context engineering - treat context as data that can be dynamically accesses via a small set of powerful primitives (e.g. search).

Code for universal interoperability

The most effective AI products meet you where you are, allowing you to input data in any way (e.g. upload any file type) or integrate them into whatever systems you use. However, it is difficult if not impossible to pre-build every integration all your users may want, or a custom tool for any kind of input the user may provide.

What can work - though - is allowing your agent to write code to dynamically process any input, write any last mile integration, or do anything on the web (via browser use) or on your computer (via computer use).

A simple, but illustrative, example of this is how recent advances in code generation models have suddenly allowed an influx of new “AI Copilot” products to be built that help automate IDE-like products that lack robust plugin or extension ecosystems. You can’t build a Cursor-style product for tools like Adobe Premiere, Adobe After Effects, Solidworks, Ansys, and Cadence because these tools are not open source and don’t have fully expressive extension ecosystems, but they all have scripting languages one can write code against.

It is almost a truism that AI products are more effective the more data that they can reference, and code generation is the best way to allow maximum flexibility on data ingestion.

Code is infinitely expressive

ChatGPT used to have specialized pan & zoom tools to manipulate images that users upload. Now, it just writes code to do image processing, which is a much more flexible architecture because the system can do far more than only panning or zooming - but rather achieve the vast majority of image processing tasks.

If you want your agent to be able to do almost anything your users want, the best way to achieve it is to allow the agent the write code. It is near impossible to fully enumerate all the tools or capabilities a given agent should have in any domain, and so having code as a fallback almost always improves agent quality.

Code generation for ephemeral software

Everything we have discussed so far has more to do with the internals of how an agent thinks & processes data. However, I also think code generation has a lot of value as a UX paradigm for interacting with agents.

While natural language interfaces for agents will not go away, there is clear value in having structured UIs that complement or augment natural language. And the best way to achieve this is to allow the agent to dynamically write ephemeral, last-mile software for the user as it does its job.

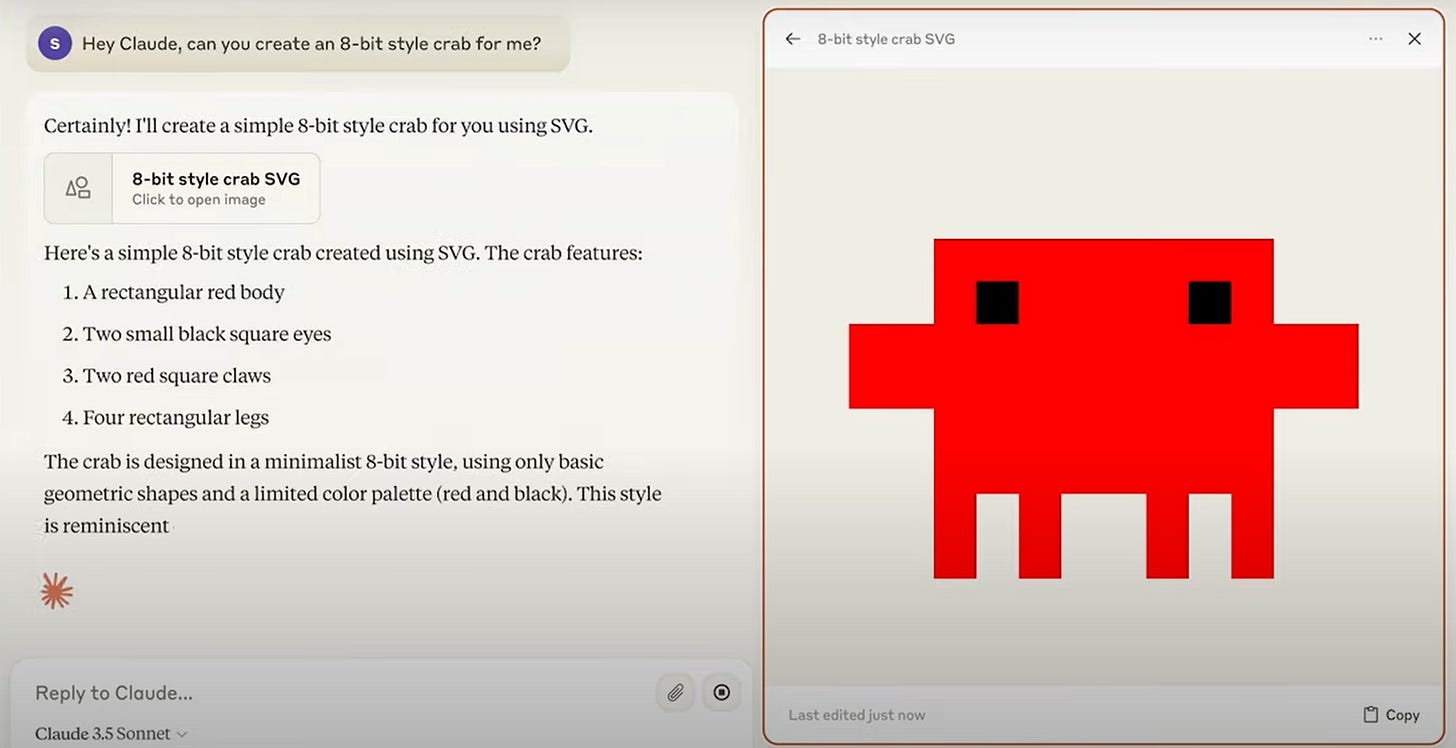

Claude Artifacts was the first great example of this, and you now see this pattern of “conversation on the left, ephemeral UI on the right” becoming much more common in consumer AI products.

I suspect that all agents will end up benefitting massively from this, because regardless of what type of user your AI product serves, they will benefit from having micro-apps and structured UIs to interact with.

_

The TLDR of the above is that - making the core of your agent a coding agent with access to a computing environment gives you such a powerful baseline that it is hard to imagine not doing it at this point.

Indeed, one of the biggest themes I see right now amongst AI startups are “Claude Code Wrappers” going after first-wave RAG/agent startups. In many cases, this strategy actually allows you to build a superior product in weeks thanks to all the benefits of code generation architectures. Claude Code taking so much market share from Windsurf/Cursor/etc despite being a random side project at Anthropic initially is a great example of this - the “LLM + Computer” agent paradigm was so powerful in of itself that it outweighed years of feature development.

I’d love to, for example, see a startup apply these ideas to “Deep Research” as a category. I think you could almost immediately build a 10x better deep research product than what OpenAI & Gemini currently offer by:

Storing all primary data (e.g. web search results, scraped web data) in a file system for ongoing access & context management. Today’s deep research products discard this data, when instead they could create a dynamic data lake associated with the research report that lets you continue to query/process the data without needing to restart the web search.

Using code for mathematical manipulation. Almost all deep research involves numerical manipulation & data analysis. This should be treated more like a code generation problem

Making deep research outputs dynamic, living artifacts rather than just static word documents - think a lightweight javascript app rather than a word document.

Using code generation to enable additional functionality for data ingestion - e.g. allow me to provide auth credentials or an API key for the deep research agent to write code to login private/proprietary systems and combine that with public web research

Downstream implications

Let’s presume that everything I have said thus far plays out, and all vertical agents start to make heavy use of code generation. What is the impact of this, and what opportunities might emerge from it?

Computing sandboxes become a default agent primitive

The first, and most obvious implication is that computing sandboxes for agents become a universal infrastructure need.

If every agent writes code, then every agent needs to isolate untrusted code execution, and every agent needs access to a filesystem and a terminal. Manus’s architecture revolving so heavily on e2b is illustrative of this.

Today, sandboxes are mostly used by pure-play code generation agents, but as more of the market moves to code-centric architectures, more AI startups will need to adopt tooling in this space. I suspect that the computing sandbox will be as universal of a need as search/retrieval engines like Turbopuffer, Chroma, & LanceDB.

While it will certainly be possible to DIY an agent sandbox on top of k8s containers or MicroVMs, I suspect best-in-class products will end up winning, analogous to how few serious AI startups simply use PGVector or FAISS for retrieval. There is a lot of room for technical innovation in this space across:

Virtualization - e.g. cold start times, isolation boundaries

Distributed systems - e.g. dealing with persistent state, syncing to remote state, cross-sandbox state, rapidly attaching large state to the VM

Environment definition/harness - e.g. what are all the right tools to expose in a sandbox and the right abstractions. Feels like file systems and git are particularly rich areas

Features/ergonomics - e.g. passing data in/out of sandbox, remote view of sandbox, etc

While there are already a number of products in this category - both specialized startups (Modal, e2b, Daytona, Runloop) and offerings from large cloud vendors (Cloudflare, Vercel) - my feeling is that the market here is still very early and there is a lot of room for further innovation.

I also think it’s possible that the idea starts to extend beyond "sandbox” to “cloud” - e.g. each agent has access to its own cloud account with a range of computing primitives (VMs, Queues, Databases, Object Storage, etc) rather than centering around just the VM. Pertinent tweet here.

A new SDLC stack will emerge for ephemeral code

If all agents begin to write large amounts of “ephemeral” code for reasoning, context management, function calling, and last-mile micro apps, we will invariably start applying many the same concepts that exist in normal software development to this code, including version control, unit tests, integration tests, code review, CICD workflows, and more.

I think this will end up looking like a high performance, “headless” Github that rhymes with existing human-centric SDLC workflows, but differs substantially in a few key ways, such as:

Performance - You’ll want to be able to run an end-to-end CICD pipeline for the code in the span of seconds (or less)

Automation - The end-to-end workflow will need to be fully automated, and not contingent on human triggers or involvement

Git - I think you can probably rethink many aspects of VCS for this use case. For example, you likely want a VCS paradigm where every change is a commit, that natively supports semantic diffs, and that allows for extremely parallel branching & conflict resolution. You may also want to store agent trajectories in git (Meta now does this) and use a storage format that allows for better search & OLAP style queries over the git history for the agent to understand what was tried previously.

API Design - My guess is many aspects of the Github API would be done differently for this workflow. For example, you probably want much more flexibility and control over what verification or review layers are applied to each piece of code, rather than a “monolithic” CICD pipeline. Some core concepts may also benefit from being modified - for example I am not sure the “repo” as the core unit of work is still the right idea given agents will write a lot of very small/micro code chunks.

Consumer Facing UI - You may end up wanting some kind of UI component that allows the end user (e.g. the user of the AI agent product which writes ephemeral code) to somehow visualize or understand or modify the code that was written or used, especially for any ephemeral UX components created

A few companies are early in exploring ideas here like Relace and Freestyle, but I think there is a lot more to be done.

Specialized “computing environment” tools

My guess is that there will be startup opportunities to build best in class version of each major “computing environment” tool. For example - if file systems remain one of the marquee components of agent architectures, then what would a file system offering built from first principles for agents look like?

I think most of these opportunities will look like open sourcing a free library that can be included in whatever sandbox provider or offering you are using, and then monetizing a cloud layer on top of that that is needed for multi-agent systems, very long running agents that might pause & resume, and/or cases where the agent must manipulate data that exceeds the memory constraints of the sandbox.

Successful teams will do a lot of work on harness engineering for that tool, ensuring that the abstraction is optimal for agent quality. The benefit of using the specialized provider will be this plus the cloud sync distributed systems layer.

Beyond file systems, I think this might also apply to OLTP databases, OLAP databases, search engines, durable execution/parallelism/threading, and git.

_

If you are applying these architectural ideas to vertical agent categories, or building infrastructure centered around these ideas, I’d love to talk to you - reach out at davis @ innovationendeavors.com